CCTV

Ascend Security Services will recommend, design, and install CCTV systems to suit all types of needs, from covert surveillance to conspicuous monitoring.

Studies show that the mere presence of cameras cuts losses by two thirds in offices and retail settings. Ascend Digital Video Recorders (DVRs) feature:

- User-friendly operation (Windows based)

- Search capability while recording

- Motion detection capability

- Remote viewing of sites “live”

- Added protection in the event of premises liability lawsuits

- Peace of mind and deterrence/prevention in one small package

Monitor, Deter, Protect

BIOMETRICS

Ascend Security Services will recommend, design, and install the latest secure entry technology: biometric systems. Biometric devices scan the fingerprint or the retina of the eye to allow only authorized personnel to gain access to secure areas. A hierarchy of authorization can also be programmed into the biometric system to allow access depending on security clearance status.

Terms & Definitions

A digital image is represented by a matrix of values, where each value is a function of the information at the corresponding point in the image. A single unit in the matrix is a "picture element", or pixel. A grayscale pixel is normally stored in one 8-bit byte; a color (RGB) pixel takes 24 bits. A display is described by the number of pixels along the width and height of the image, 720x486 for example. "Real time" recording is 30 FPS (frames per second); at this rate, under average lighting conditions, the flicker between frames is not noticeable. As the frame rate decreases, the flicker becomes more noticeable. The response of a TV signal begins to deteriorate above a certain rate.

Bandwidth (BW)

The range of frequencies a circuit will respond to or pass through. It may also be the difference between the highest and lowest frequencies of a signal. Bandwidth places an upper limit on the horizontal resolution, or number of lines that can be displayed.

Resolution

Refers to the capability of the imaging system to produce the fine detail in the picture. The upper limit in digital is the total number of pixels.

As picture detail increases, the response of the imaging system will eventually deteriorate.

Compression As a Ratio

When a manufacturer specifies a compression ratio without any other supporting data, you should be wary. Depending on how the manufacturer calculated the size of uncompressed video, how the numbers were rounded, and how the compressed stream was measured, the ratio may be anywhere from fairly accurate to completely absurd. Whenever possible, find out the data rate in megabytes per second and calculate the ratio yourself. If the data rate is unavailable, make sure the ratio was calculated based on 4:2:2 CCIR 601 encoding practices and that the frame/field size and rate match the above.

CODEC

A video codec is software that can compress a video source (encoding) as well as play back compressed video (decoding).

The term intra coding refers to the fact that the various lossless and lossy compression techniques are performed relative to information that is contained only within the current frame, and not relative to any other frame in the video sequence. In other words, no temporal processing is performed outside of the current picture or frame. The 4:2:0 format contains one quarter of the chrominance information of full-resolution (4:4:4) video. Although MPEG-2 has provisions to handle the higher chrominance formats for professional applications, most consumer-level products use the normal 4:2:0 format.

Artifacts

In the video domain, artifacts are blemishes, noise, snow, spots, whatever. When you have an image artifact, something is wrong with the picture from a visual standpoint. Don't confuse this term with not having the display properly adjusted. For example, if the hue control is set wrong, the picture will look bad, but this is not an artifact. An artifact is some physical disruption of the image.

Compression Ratio

Compression ratio is a number used to tell how much information is squeezed out of an image when it has been compressed. For example, suppose we start with a 1 MB image and compress it down to 128 KB. The compression ratio would be: 1,048,576 / 131,072 = 8

This represents a compression ratio of 8:1; 1/8 of the original amount of storage is now required. For a given compression technique (MPEG, for example), the higher the compression ratio, the worse the image looks. This has nothing to do with which compression method is better, for example JPEG vs. MPEG. Rather, it depends on the application. A video stream that is compressed using MPEG at 100:1 may look better than the same video stream compressed to 100:1 using JPEG.
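The arithmetic above can be sketched in a few lines of Python. The function name `compression_ratio` is only an illustrative choice, and 1 MB is taken as 1,048,576 bytes to match the example.

```python
def compression_ratio(original_bytes, compressed_bytes):
    """Return how many times smaller the compressed data is."""
    if compressed_bytes <= 0:
        raise ValueError("compressed size must be positive")
    return original_bytes / compressed_bytes

original = 1 * 1024 * 1024   # 1 MB image = 1,048,576 bytes
compressed = 128 * 1024      # 128 KB after compression = 131,072 bytes

ratio = compression_ratio(original, compressed)
print(f"{ratio:.0f}:1")                       # 8:1
print(f"storage needed: {1 / ratio:.3f}")     # fraction of the original size
```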

DCT

This is short for Discrete Cosine Transform, used in the JPEG, MPEG, H.261, and H.263 compression algorithms. Discrete cosine transform coding is a lossy compression technique that samples an image at regular intervals, analyzes the frequency components present in the sample, and discards those frequencies which do not affect the image as the human eye perceives it.
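As a rough illustration of the idea, the sketch below applies a one-dimensional DCT to a single row of eight pixel values, throws away the higher-frequency coefficients, and rebuilds the row. It is a simplification under stated assumptions: real codecs work on two-dimensional 8x8 blocks and quantize coefficients rather than simply zeroing them, and the pixel values here are made up.

```python
import math

def dct(samples):
    """Orthonormal DCT-II of a list of samples."""
    n = len(samples)
    out = []
    for k in range(n):
        total = sum(x * math.cos(math.pi * (i + 0.5) * k / n)
                    for i, x in enumerate(samples))
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * total)
    return out

def idct(coeffs):
    """Inverse of dct() above (an orthonormal DCT-III)."""
    n = len(coeffs)
    out = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c * math.cos(math.pi * (i + 0.5) * k / n)
        out.append(total)
    return out

# Hypothetical row of pixels with a smooth brightness ramp.
row = [100, 102, 104, 106, 108, 110, 112, 114]
coeffs = dct(row)

# Keep only the two lowest-frequency coefficients, discard the other six.
kept = coeffs[:2] + [0.0] * (len(coeffs) - 2)
rebuilt = idct(kept)

max_error = max(abs(a - b) for a, b in zip(row, rebuilt))
print([round(c, 2) for c in coeffs])
print([round(v, 1) for v in rebuilt])
print("largest error in gray levels:", round(max_error, 2))
```

For a smooth row like this one, most of the information sits in the first few coefficients, which is why the rebuilt row stays within about one gray level of the original.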

Decimation

When a video signal is digitized so that 100 samples are produced, but only every other one is stored or used, the signal is decimated by a factor of 2:1. When this is done both horizontally and vertically, the image is now 1/4 of its original size, since 3/4 of the data is missing. If only one out of five samples were used, then the image would be decimated by a factor of 5:1, and the image would be 1/25 its original size. Decimation, then, is a quick-and-easy method for image scaling.

Decimation can be performed in several ways. One way is the method just described, where data is literally thrown away. Even though this technique is easy to implement and cheap, it introduces aliasing artifacts.
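A minimal sketch of the throw-away method, assuming the image is a simple list of rows and that samples are dropped in both directions (the `decimate` helper is hypothetical):

```python
def decimate(image, factor):
    """Keep every `factor`-th sample in each row and every `factor`-th row."""
    return [row[::factor] for row in image[::factor]]

# A made-up 10x10 image: 100 samples in total.
image = [[r * 10 + c for c in range(10)] for r in range(10)]

half = decimate(image, 2)    # 5x5 = 25 samples, 1/4 of the original
fifth = decimate(image, 5)   # 2x2 = 4 samples, 1/25 of the original

print(len(half), "x", len(half[0]))
print(fifth)
```

Because the discarded samples are never filtered first, fine detail in the original can show up as aliasing in the smaller image.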

Flicker

Flicker occurs when the frame rate of the video is too low. It's the same effect produced by an old fluorescent light fixture. The two problems with flicker are that it's distracting and tiring to the eyes.

Huffman Coding

Huffman coding is a method of data compression. It doesn't matter what the data is -- it could be image data, audio data, or whatever. It just so happens that Huffman coding is one of the techniques used in JPEG, MPEG, H.261, and H.263 to help with the compression. This is how it works. First, take a look at the data that needs to be compressed and create a table that lists how many times each piece of unique data occurs. Now assign a very small code word to the piece of data that occurs most frequently. The next largest code word is assigned to the piece of data that occurs next most frequently. This continues until all of the unique pieces of data are assigned unique code words of varying lengths. The idea is that data that occurs most frequently is assigned a small code word, and data that rarely occurs is assigned a long code word, resulting in space savings.
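The table-building steps described above can be sketched as follows. This is one common way to build the code (repeatedly merging the two least frequent entries); the sample data and the function name `huffman_codes` are illustrative assumptions.

```python
import heapq
from collections import Counter

def huffman_codes(data):
    """Return a dict mapping each symbol in `data` to a bit string."""
    freq = Counter(data)
    if not freq:
        raise ValueError("no data to encode")
    if len(freq) == 1:
        return {next(iter(freq)): "0"}
    # Heap entries: (count, tie_breaker, {symbol: code_so_far}).
    heap = [(count, i, {sym: ""}) for i, (sym, count) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent groups, prefixing their codes.
        c1, _, codes1 = heapq.heappop(heap)
        c2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes1.items()}
        merged.update({s: "1" + c for s, c in codes2.items()})
        heapq.heappush(heap, (c1 + c2, next_id, merged))
        next_id += 1
    return heap[0][2]

data = "aaaaaaaabbbbccd"          # 'a' is frequent, 'd' is rare
codes = huffman_codes(data)
encoded = "".join(codes[s] for s in data)

for sym in sorted(codes, key=lambda s: len(codes[s])):
    print(sym, codes[sym])
print(len(encoded), "bits, versus", 2 * len(data), "bits at a fixed 2 bits per symbol")
```

The most frequent symbol ends up with the shortest code word, so the encoded stream is shorter than a fixed-length encoding of the same data.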

Motion Estimation

Motion estimation is trying to figure out where an object has moved to from one video frame to the other. Why would you want to do that? Well, let's take an example of a video source showing a ball flying through the air. The background is a solid color that is different from the color of the ball. In one video frame the ball is at one location and in the next video frame the ball has moved up and to the right by some amount. Now let's assume that the video camera has just sent the first video frame of the series. Now, instead of sending the second frame, wouldn't it be more efficient to send only the position of the ball? Nothing else moves, so only two little numbers would have to be sent. This is the essence of motion estimation. By the way, motion estimation is an integral part of MPEG, H.261, and H.263.
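The ball example can be sketched with a simple block search: take the block that contains the ball in the first frame and look for the best match nearby in the second frame. This is an illustrative sketch only, with made-up 8x8 frames and hypothetical helper names; it is not the exact search any particular codec uses.

```python
def find_motion(prev, curr, top, left, size, search):
    """Return (dy, dx): how far the block at (top, left) in `prev` moved in `curr`.

    Candidates are scored by the sum of absolute differences (SAD).
    """
    height, width = len(curr), len(curr[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + size > height or x + size > width:
                continue  # candidate block would fall outside the frame
            sad = sum(abs(prev[top + i][left + j] - curr[y + i][x + j])
                      for i in range(size) for j in range(size))
            if best is None or sad < best[0]:
                best = (sad, dy, dx)
    return best[1], best[2]

def frame_with_ball(top, left, size=2, dim=8):
    """Solid background (0) with a bright square 'ball' (255)."""
    frame = [[0] * dim for _ in range(dim)]
    for i in range(size):
        for j in range(size):
            frame[top + i][left + j] = 255
    return frame

first = frame_with_ball(top=4, left=2)
second = frame_with_ball(top=2, left=5)   # ball moved up 2 rows, right 3 columns

dy, dx = find_motion(first, second, top=4, left=2, size=2, search=3)
print(dy, dx)   # the "two little numbers" that describe the move
```

Here a negative `dy` means the ball moved up and a positive `dx` means it moved right.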

Resolution

This is the basic measurement of how much information is visible for an image. It is usually described as "h" x "v". The "h" is the horizontal resolution (across the display) and the "v" is the vertical resolution (down the display). The higher the numbers, the better, since that means there is more detail to see. If only one number is specified, it is the horizontal resolution.

Video compression

Digital signal compression is the process of digitizing an analog television signal by encoding a TV picture to "1s" and "0s". In video compression, certain redundant details are stripped from each frame of video. This enables more data to be squeezed through a coaxial cable, into a satellite transmission, or a compact disc. The signal is then decoded for playback. In simpler terms, "video compression is like making concentrated orange juice: water is removed from the juice to more easily transport it, and added back later by the consumer." Heavy research and development into digital compression has taken place over the past years because of the enormous advantages that digital technology can bring to the security, telecommunications, and computer industries. The use of compressed digital over analog video allows for lower video distribution costs, increases the quality and security of video, and allows for interactivity.

Currently, a number of compression technologies are available, for example MPEG-1, MPEG-2, MPEG-4, Motion JPEG, H.261, and DivX. Digital compression can take many forms and be suited to a multitude of applications. Each compression scheme has its strengths and weaknesses, because the codec you choose determines how good the images will look, how smoothly they will flow, and what video data rate is needed for usable picture quality. A common characteristic of most images is that neighboring pixels are correlated and therefore contain redundant information. The foremost task then is to find a less correlated representation of the image. Two fundamental components of compression are redundancy and irrelevancy reduction. Redundancy reduction aims at removing duplication from the signal source (image/video). Irrelevancy reduction omits parts of the signal that will not be noticed by the signal receiver, namely the Human Visual System (HVS).

Introduction to compression formats

JPEG - Joint Photographic Experts Group

MPEG - Moving Picture Experts Group

Both meet under the ISO (International Organization for Standardization) to generate standards for digital video and audio compression.

JPEG was developed to compress stills, where the algorithm doesn't have to be very fast.

Motion JPEG (M-JPEG) is best suited to video editing and isn't as efficient as MPEG-1 or MPEG-2. MPEG is "block-based" and uses motion-compensated prediction; more on this later. MPEG pushes the limits of economical VLSI technology, and you might not get what you pay for in terms of picture quality or compaction efficiency.

MPEG-1 was designed for up to 1.5 Mbit/s and is based on CD-ROM video applications (it is a popular standard for video on the internet too). This was the first real-time compression format for audio and video; resolution is typically 352x240. It was finalized in 1991. The ideal compression ratio is 6:1.

MPEG-2 is for bit rates from 1.5 to 15 Mbit/s, with the target rate being between 4 and 9 Mbit/s. This is the standard for DVD. It is based on MPEG-1, but the most significant enhancement is its ability to efficiently compress interlaced video. It was finalized in 1994.

MPEG-4 is the standard for multimedia and web compression. It is an object-based compression (software image construct descriptors): individual objects within a scene are tracked separately and compressed together to create a file. Developers can isolate and control parts of the scene independently. It is a very efficient compression that is able to deal with a wide range of bit rates. The final license agreement was signed in July of 2002. We can expect MPEG-4 to eventually replace prior MPEG formats.

Wavelet transformation is the latest cutting edge technology. It works by transforming images into frequency components. The process involves the whole image rather than smaller pieces (blocks) of data. Since the wavelet size is well defined mathematically the frame size remains small. As the frequencies are being analyzed, the scaling may vary as well. This makes the format very well suited to signals with spikes or discontinuities (rapid motion or complex scenes). Wavelet based coding provides substantial improvements in picture quality at the highest compression ratios. Wavelets outperform the coding schemes based on DCT as there is no need to block the input image and no artifacts are induced at high compression ratios. All the top contenders at the JPEG-2000 standard were wavelet based compression algorithms. It is considered to be the best method for enlarging stills from the video stream.

Wavelets:

Wavelet Technology

- Wavelet transforms are making possible real-time video compression and decompression (codec) in video recording.

- Wavelet video compression substantially reduces the digital image data by selectively transmitting and reconstructing only those frequencies that are most significant to the eye, thus achieving compression ratios unachievable by other technologies.

- Wavelets achieve a higher compression ratio and thus reduce storage and transmission costs without sacrificing image quality.

- More cost-effective than MPEG, JPEG, or Motion JPEG.

- All wavelet solutions remain proprietary.

Compression Algorithms

Lossless compression, at ratios of less than 3:1, preserves all data in a digitized image, ensuring that the decompressed image on the receiving end is a pixel-for-pixel duplicate of the original.

- Lossy compression permanently reduces the file; even when the file is uncompressed, only part of the information is there. It improves transmission speeds and storage capabilities by reducing the amount of data to be transmitted, thus enabling higher compression ratios.

- Because lossy algorithms work by selectively discarding image data, the decompressed images are not exactly the same as the originals.

- Some lossy techniques introduce image artifacts such as blocking and noise, which at higher compression ratios have the potential to impair interpretation.

- Lossy compression will produce artifacts, significant data loss, or both at higher compression ratios.

Wavelets Challenge MPEG

- MPEG and Wavelets are lossy compression

- Wavelets compress an image as a whole rather than in 8X8-pixel blocks.

- These blocks create the effect of "blocking" in JPEG/MPEG images.

- Wavelets eliminate the "blocking" problem by breaking images into frequency components.

- The algorithm looks for edges of objects and shades of gray and applies parameters to the different segments of the image so that some high-frequency data are discarded but other high-and low-frequency information is retained.

- JPEG limited to compression ratios of around 10:1; Wavelets up to 350:1

- At higher MPEG compression ratios the image quality begins to deteriorate and blocking becomes more prevalent.

Wavelet vs. MPEG

- MPEG and wavelet compression differences stem from their technological roots.

- MPEG, like JPEG, is based on the discrete cosine transform, a mathematical formula that separates images and video into 8x8-pixel blocks and then compresses those blocks.

- Wavelets compress pixels in a continuous stream

- MPEG able to compress video at rates up to 100:1, but most implementations are between 30:1 and 60:1.

- Reason - Lower compression ratios lessen the effect of "pixelation" on image.

- Continuous pixel compression technique used by wavelets allows them to drop more unessential visual information and still retain the overall image.

Video is an accurate visual representation of some event. The visual representation is normally created through the use of a video camera. The camera converts the image it views to an analog voltage. This analog voltage (a.k.a. video signal) can be transmitted via wire (coax), or RF (Radio Frequency) to be remotely viewed or recorded. In the security industry video is used as a tool for viewing and recording events and as a deterrent to deviant behavior.

What is Compression and why is it needed?

To create digital video, the video signal generated by the video camera must be transformed from the analog voltage to a digital representation. This is accomplished through a process called Digital Signal Processing (DSP). Once the conversion from the analog signal to the digital representation is complete, the video can be stored on typical computer media such as a hard drive. If the digital representation were stored exactly as converted, the data storage required would be in excess of 21 megabytes per second. A typical CD can hold 670 megabytes worth of data, which is less than one minute of video. This leads to the use of compression techniques. Compression is the technique of storing video images in less space by removing unnecessary data. Through the use of compression you can decrease the amount of storage required for the video images without losing much video quality. The CD that would originally hold less than one minute's worth of video can now hold a full-length movie when the video is compressed. In most cases it is desirable to remove as much information as possible without degrading the visual reproduction of the image.
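The "about 21 megabytes per second" figure can be reproduced with a short calculation. The sketch below assumes a 720x486 frame, 30 frames per second, and 2 bytes per pixel (the average for 4:2:2 sampling); these inputs are assumptions chosen to match numbers used elsewhere on this page, not a statement of how the original figure was derived.

```python
WIDTH, HEIGHT = 720, 486      # frame size used elsewhere on this page
BYTES_PER_PIXEL = 2           # assumption: 4:2:2 sampling averages 2 bytes per pixel
FRAMES_PER_SECOND = 30        # "real time"

bytes_per_second = WIDTH * HEIGHT * BYTES_PER_PIXEL * FRAMES_PER_SECOND
megabytes_per_second = bytes_per_second / 1_000_000

CD_BYTES = 670 * 1_000_000    # a typical CD, taken as 670 megabytes
cd_seconds = CD_BYTES / bytes_per_second

print(f"uncompressed rate: {megabytes_per_second:.1f} MB/s")
print(f"a CD holds about {cd_seconds:.0f} seconds of uncompressed video")
```

Under these assumptions a CD holds roughly half a minute of uncompressed video, which is why compression is needed.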

What is Wavelet Compression?

Wavelet is the newest compression technology available on the consumer market. The following excerpt from Analog Devices Wavelet Signal Processing Technology white paper describes how the compression works.

Wavelet technology enables digital video to be compressed by removing all obvious redundancy and using only the areas which can be perceived by the human eye. The human eye cannot resolve high frequencies. This is analogous to humans not hearing high frequency sounds, a dog whistle is an example of such a product.

Wavelet technology filters the entire field or each frame at once. This approach results in no blocky artifacts or clunky images. Wavelets 'gracefully' degrade the picture quality as the compression rate increases. In addition, Wavelets enable precise bit rate or quality control of the images and a built-in error-resilient bit stream. The end result is a simple, efficient hardware implementation. The designer is able to control and to specify the compression rate for the video application under consideration. Broadcast rates are low ratio compression rates which are memory intensive, while security applications employ high compression rates to enable weeks to months of video to be stored.

Despite all the advantages of MPEG compression based on DCT, namely cheap, simple, satisfactory performance and wide acceptance, it is not without shortcomings. Since the image must be "blocked" there is no way to eliminate correlation across the block boundaries. As the data gets more difficult to compress noticeable and annoying artifacts will be produced. MPEG video is also based on motion estimation (temporal prediction). The basic premise of motion estimation is that in most cases, consecutive video frames will be similar except for changes induced by objects moving within the frame. In a near zero motion environment, say a parking garage, the format is fine. But for a mobile application, the situation is not so simple and even the best MPEG system will be taxed. Ideal frame data size of M/JPEG is up to 4K. Wavelet files easily handle up to 16K with no artifacts.

A cheap (low-end) MPEG video board in real time will have around 160x120 resolution at best. Nobody is doing better than 352x240 at 30 FPS without special hardware. Low-end manufacturers call their product real time in one sentence, then in another may claim 640x480 resolution, but you won't see those claims together. The MPEG makers may also call their compression ratios 200:1. Even here you have to be careful. The 200:1 claim may mean "take a 640x480 image and sub-sample it to 320x240 (a 75% reduction), then use MPEG to compress 50:1." Compare that to a wavelet system operating in real time with 720x486 resolution that has a ratio of 350:1. Then consider that full-frame wavelet induces none of MPEG's artifacts.
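To see how sub-sampling can inflate an advertised ratio, the arithmetic in that claim can be worked through as a short sketch (variable names are illustrative):

```python
full_pixels = 640 * 480          # advertised resolution
sub_pixels = 320 * 240           # what is actually compressed

subsample_ratio = full_pixels / sub_pixels     # pixels discarded before MPEG runs
codec_ratio = 50                               # what the MPEG codec itself achieves

advertised_ratio = subsample_ratio * codec_ratio
reduction = 1 - sub_pixels / full_pixels

print(f"sub-sampling alone: {subsample_ratio:.0f}:1 ({reduction:.0%} of pixels dropped)")
print(f"advertised ratio:   {advertised_ratio:.0f}:1")
```

In other words, a factor of four in the advertised number comes from throwing pixels away, not from the codec.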

Questions & Answers

Frequently Asked Questions:

What is Light?

How do we see color?

Can I use any type of CCTV camera outdoors?

If I install a video capture board in a computer and find out that I need to add additional cameras that exceed the board’s capacity, would I be able to resolve my dilemma by adding additional video capture boards?

Why are color CCTV cameras better than Black and White (B/W), even though B/W cameras will work at lower light levels?

Why don't I need 30 frames per second video recording on all cameras?

What does an auto-iris lens do for me?

Do I need a fixed lens or a vari-focal lens?

How far from the computer can I place cameras?

Can I use wireless transmission from cameras to computer instead of cables?

Can I use my existing cameras mixed with new ones?

How many days/weeks of recording can I store?

What happens when my hard disk is full?

What is Light?

What do you call things that you see because they GIVE OFF their own light? Luminous. Luminous objects include the sun and an electric light bulb. The moon is not luminous, rather, moonlight happens when light from the sun bounces off the moon.

Dark things usually soak up the light that falls on them with very little light bouncing off (reflected). That is why darker objects are harder to see. Light things bounce (reflect) more light.

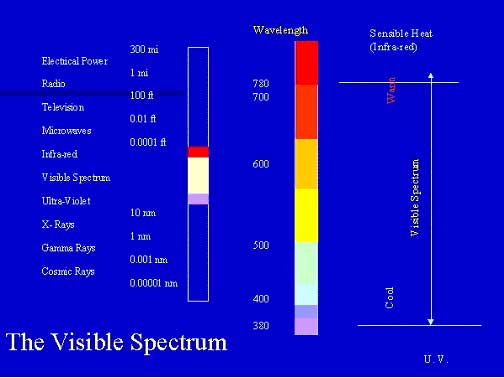

- Light is studied in physics. Visible light is one part of the electromagnetic spectrum.

- Light is visible radiant energy.

- Light is electromagnetic radiation.

- Visible light has a wavelength of 380 nm to 780 nm.

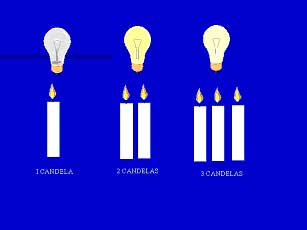

Light intensity was historically measured using candles as a measuring stick (candlepower). Today it is measured in candelas.

Illuminance is measured in lux or footcandles.

How do we see color?

The reason you see a blue object is that only blue light is reflected from that object. The light falling on the object most probably contains many other colors of the spectrum. However, all the other colors are absorbed by the object, and the only color reflected to you is the blue light, which your eyes pick up. In general your eyes see black and white below 10 lux simply because there is not enough light for your eyes to distinguish any colors. Cameras are really not much different from human eyes. This is why an infrared (IR) camera comes in handy below 10 lux.

Can I use any type of CCTV camera outdoors?

Not really. Some mini-cameras are designed for outside use with waterproof cases (e.g., bullet cameras). These cameras generally have fixed lenses. If you need a vari-focal or auto-iris lens, the bullet camera won't work. In cold or tropical climates (with lots of rain), cameras should be mounted inside special housings. These housings are weatherproof and may contain a heater unit to take care of the freezing cold. For this reason, cameras intended for indoor mounting should never be used outdoors without a special housing.

If I install a video capture board in a computer and find out that I need to add additional cameras that exceed the board's capacity, would I be able to resolve my dilemma by adding additional video capture boards?

Maybe! It really depends on the system and the board. Some systems can start with a board for 4 cameras and go to 16 on live recording (30 frames per second per camera). In general, though, the answer is no. Digital Video Recorders only recognize one video capture board.

Why are color CCTV cameras better than Black and White (B/W), even though B/W cameras will work at lower light levels?

It wasn't long ago that color cameras were considerably more expensive than B/W ones. You'd have to pay a premium to see things in color. Of course B/W cameras did come with a higher light sensitivity and resolution. Today, you can replace your big old bulky B/W cameras with smaller high-resolution cameras perhaps at a lower cost.

Why don't I need 30 frames per second video recording on all cameras?

The FBI needs 1 frame per second (fps) to convict. If you were robbing a bank, by the time you walk into a bank and approach a teller, it will probably take you 10 seconds. If the bank was recording you at a speed of 1.8 fps, in 10 seconds it would have had 18 frames or pictures of you walking in. This is more than enough for the FBI!

Now imagine that you manage a casino and with the stroke of his hand a con-artist can cheat you out of thousands of dollars in seconds! This is when "live" recording of 30 fps comes in handy. You take 30 pictures of the con-artist each second to allow you to decipher what he has done.

Most of the time you don't run into a casino-type scenario, so most applications do not need 30 fps or live recording. In fact, a generally good recording speed is about 7.5 fps and no more!

What is compression and why is it necessary?

The video signal generated by a video camera must be transformed from the analog voltage to a digital representation. This process is called Digital Signal Processing (DSP). Video can then be stored on a typical hard drive.

However, if the digital representation were stored exactly as converted, the data storage required would be in excess of 21 megabytes per second! A typical CD can hold 670 megabytes worth of data, less than 1 minute of video. Compression becomes a necessity!

Video images are stored in less space by removing unnecessary data without losing much video quality. In this manner, the CD that would originally hold less than a minute's worth of video can now hold a full-length movie when the video is compressed.

What does an auto-iris lens do for me?

Light intensity changes during the day. We all know that, and our eyes generally do a great job of adjusting to it through the iris. The same is true for cameras: they are also subject to light variations. Camera displays and recordings are set for a certain level of brightness and contrast. When the brightness or contrast deviates from this set level, objects appear overexposed, underexposed, or just washed out. This is where an auto-iris lens is very beneficial. Just like the human eye, an auto-iris lens opens and closes its iris as light intensity decreases or increases, providing a stable video signal most of the time.

Do I need a fixed lens or a vari-focal lens?

If you are not sure what focal length you need to clearly see your field of view, it would be prudent to purchase a vari-focal lens. A good one to start with is a 3.5 mm to 8 mm vari-focal lens.

How far from the computer can I place cameras?

Using Pelco's rule of thumb,

- 750 ft with RG-59

- 1500 ft with RG-6

- 1800 ft with RG-11

Can I use wireless transmission from cameras to computer instead of cables?

Not to sound like an accountant, but it really depends. If you have a clear line of sight with no obstacles, and a good range, then the answer is yes. However, when you transmit using wireless on a 2.4 GHz frequency, you don't have a very secure transmission. People who should not be seeing the video may end up viewing it! If neither of these issues is a problem, then the answer is yes.

2.4 GHz is a high frequency that can be transmitted over longer ranges with a clear line of sight, but only without any obstacles such as walls or trees.

Can I use my existing cameras mixed with new ones?

The answer is yes, provided certain conditions are met. Beware that such mixtures sometimes cause a synchronization problem or interference between one camera and another. Even with the same color type, some older and newer cameras mixed together can cause problems because of very different image synchronizing systems. In this event, you can get interference between one camera image and another. Color cameras are more prone to this type of problem. Today's digital video recording products will handle a combination of color and B/W cameras.

How many days/weeks of recording can I store?

Again an accountant's answer. It depends. The following will have an impact on the length of your storage:

- The size of your hard drive

- The frame rate or speed at which you record

- The compression technique used by your DVR (MPEG, Wavelet)

- The resolution with which you record (320x240 or 640x480, etc.)

- Use of motion detection for recording

The good news is that hard drives are increasing in size and dropping in price. In addition, you have the ability to use multiple drives.
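A rough way to combine the factors listed above is sketched below. Every number in it (drive size, camera count, average compressed frame size, share of time with motion) is a hypothetical input you would replace with figures for your own system, and `recording_days` is an illustrative helper, not part of any DVR product.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def recording_days(disk_gb, cameras, fps, frame_kb, activity=1.0):
    """Estimate days of storage.

    disk_gb  - total hard drive capacity in gigabytes
    cameras  - number of cameras being recorded
    fps      - frames per second recorded per camera
    frame_kb - average compressed frame size in kilobytes (depends on
               compression technique and resolution)
    activity - fraction of the day actually recorded when motion
               detection is used (1.0 = continuous recording)
    """
    bytes_per_day = cameras * fps * frame_kb * 1000 * SECONDS_PER_DAY * activity
    return disk_gb * 1_000_000_000 / bytes_per_day

continuous = recording_days(disk_gb=500, cameras=4, fps=7.5, frame_kb=16)
with_motion = recording_days(disk_gb=500, cameras=4, fps=7.5, frame_kb=16, activity=0.25)

print(f"continuous recording: about {continuous:.0f} days")
print(f"motion detection (25% activity): about {with_motion:.0f} days")
```

Halving the frame rate or the frame size doubles the estimate, which is why frame rate and compression choices matter as much as drive size.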

What happens when my hard disk is full?

Again, an accountant's answer: FIFO (First In, First Out). This means that if you have the capacity to record for 10 days, on day 11 the system will record over day 1, on day 12 it will record over day 2, and so on. If you have more than one drive, it will move from one drive to the next (e.g., from C: to D: to E:) until all drives are full before it starts the FIFO.
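The FIFO behavior can be pictured with a fixed-size queue. This is only a sketch of the idea, assuming a 10-day capacity as in the example; an actual DVR overwrites files on disk rather than items in a Python queue.

```python
from collections import deque

CAPACITY_DAYS = 10

# A deque with maxlen drops its oldest item when a new one is added to a full queue.
storage = deque(maxlen=CAPACITY_DAYS)

for day in range(1, 13):          # record days 1 through 12
    storage.append(f"day {day}")

print(list(storage))              # days 1 and 2 have been recorded over
print("oldest recording kept:", storage[0])
```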